Language models are currently very popular. Many of them are built by connecting a base model like ChatGPT to a vector database. This allows the model to provide contextually relevant answers to the questions asked.

What Is a Vector Database?

A vector database is a type of database that stores and organizes vector data, such as numeric embeddings of text. For a language model, it is a treasure trove of material to draw on when composing better answers. For example, suppose “The Bitcoin Standard” is split into paragraphs and stored in a vector database. You can then ask this intriguing new “model” a question about the history of money.

The model will query the database, find relevant context from “The Bitcoin Standard,” and pass it as input to the underlying model (often ChatGPT). The answer should then be more relevant. While this approach has its merits, it does not address the underlying problems of noise and bias in the base model’s training.
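As a rough illustration of the retrieval step described above, the following Python sketch ranks stored paragraphs against a question and assembles a prompt for the base model. It is a minimal sketch under stated assumptions: the bag-of-words `embed` function stands in for a real embedding model, the in-memory list stands in for a vector database, and the sample paragraphs are invented placeholders, not quotations from the book. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (assumption): a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, paragraphs: list, k: int = 2) -> list:
    """Return the k stored paragraphs most similar to the question."""
    q = embed(question)
    ranked = sorted(paragraphs, key=lambda p: cosine(q, embed(p)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list) -> str:
    """Combine retrieved context and the question into one prompt string."""
    joined = "\n\n".join(context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{joined}\n\nQuestion: {question}"
    )


# Placeholder paragraphs (invented for illustration only).
PARAGRAPHS = [
    "Gold became money because it is scarce and hard to produce.",
    "Seashells and salt were used as money in earlier societies.",
    "A recipe for sourdough bread needs flour, water, and salt.",
]

question = "Why did gold become money?"
top = retrieve(question, PARAGRAPHS, k=1)
prompt = build_prompt(question, top)  # this string would be sent to the base model
```

Note that the base model itself is untouched in this setup: only the prompt changes, which is why retrieval cannot remove noise or bias already present in the model.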

At Spirit of Satoshi, this was the initial approach. The firm built such a model six months ago, and it is available for trial. The resulting chatbot lacks conversational skills and struggles with knowledge about shitcoins and Bitcoin.

Therefore, the firm decided to take a new approach and build a complete language model. This guide describes that process.

A More “Grounded” Version of the Bitcoin Language Model

The search for a more “grounded” language model continues. The work has been more involved than expected, not because of technical complexity but because of how tedious it is.

Data is everything. What matters is not the quantity of data but its quality and format. You may have heard enthusiasts say this, but you only truly appreciate it when you start feeding data into a model and get results you did not expect.

The heart of the operation is the data pipeline. The data must first be collected and organized, then extracted. The first cleaning pass has to be done programmatically, because cleaning that volume of data by hand is not feasible.
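A first programmatic cleaning pass might look something like the sketch below. It is only an illustration: the function name `clean_document`, the `min_words` threshold, and the specific rules (strip HTML tags, collapse whitespace, drop very short lines, drop exact duplicates) are assumptions, not a description of the pipeline actually used.

```python
import html
import re

TAG_RE = re.compile(r"<[^>]+>")  # crude HTML tag matcher, good enough for a sketch


def clean_document(raw: str, min_words: int = 4) -> list[str]:
    """Return cleaned, de-duplicated lines from a raw extracted document."""
    # Remove tags first, then decode entities such as &amp;.
    text = html.unescape(TAG_RE.sub(" ", raw))
    seen = set()
    kept = []
    for line in text.splitlines():
        line = re.sub(r"\s+", " ", line).strip()  # collapse runs of whitespace
        if len(line.split()) < min_words:
            continue  # likely navigation or widget residue ("Share this")
        key = line.lower()
        if key in seen:
            continue  # exact duplicate (case-insensitive)
        seen.add(key)
        kept.append(line)
    return kept


RAW = (
    "<p>Bitcoin is a   peer-to-peer electronic cash system.</p>\n"
    "Share this\n"
    "Bitcoin is a peer-to-peer electronic cash system.\n"
    "Sound money &amp; low time preference go together."
)
cleaned = clean_document(RAW)
```

Rules like these are cheap to run over large volumes of text, but they only catch mechanical problems; judging whether the content itself is any good is a separate step.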

Once the programmatically cleaned raw data is obtained, it needs to be converted into various formats, such as question-answer pairs or semantically coherent chunks and paragraphs. This also has to be done programmatically because of the volume of data involved. Interestingly, other language models do an excellent job of exactly this: language models are used to build new language models.
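The conversion step can be sketched as follows. This is a hedged example: `chunk_paragraphs` groups whole paragraphs into chunks under an assumed word budget, and `qa_prompt` builds an instruction that could be handed to a helper language model to produce a question-answer pair. Both names, the `max_words` default, and the prompt wording are hypothetical, and no model is actually called here.

```python
def chunk_paragraphs(text: str, max_words: int = 120) -> list[str]:
    """Group consecutive paragraphs into chunks of at most max_words words.

    Paragraphs are never split, so a single paragraph longer than
    max_words becomes its own oversized chunk.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks, current, count = [], [], 0
    for p in paragraphs:
        n = len(p.split())
        if current and count + n > max_words:
            chunks.append("\n\n".join(current))  # flush the chunk built so far
            current, count = [], 0
        current.append(p)
        count += n
    if current:
        chunks.append("\n\n".join(current))
    return chunks


def qa_prompt(chunk: str) -> str:
    """Build an instruction asking a helper model for one Q&A pair."""
    return (
        "Write one question and its answer using only the passage below.\n\n"
        f"Passage:\n{chunk}"
    )


sample = "one two three\n\nfour five\n\nsix seven eight nine"
chunks = chunk_paragraphs(sample, max_words=5)
prompts = [qa_prompt(c) for c in chunks]
```

Keeping paragraphs intact is one simple way to keep chunks semantically coherent; splitting on a fixed character count would be easier but can cut sentences in half.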

Data Quality Assessment and Cleaning Are Very Important

A second, thorough cleaning is then needed, because the language model used to transform the data produces its own share of garbage and irrelevant content.

Human help matters here, because humans can still discern and assess quality in ways that algorithms cannot. Algorithms have some ability in this area, but they struggle with language, especially in a nuanced domain like Bitcoin.

Scaling this task is extremely hard unless you have an army of people. That army can consist of well-funded mercenaries, as at OpenAI, or dedicated missionaries, like the Bitcoin community at Spirit of Satoshi. People look at the data and decide whether to keep it, discard it, or change it.

Once the data has gone through this process, it comes out clean on the other end. Of course, there are additional challenges. For the cleaning process to go smoothly, it is essential to identify and screen out any malicious attempts to disrupt it.

There are various ways to do this, each with its own peculiarities. One option is to screen people as they join and to build an internal consensus model that determines which data items are kept and which are discarded. Spirit of Satoshi plans to implement these strategies in the coming months and measure their effectiveness.
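One very simple form a consensus model could take is majority voting with a minimum number of reviewers, sketched below. The labels, the `min_votes` and `threshold` defaults, and the function name are all assumptions made for illustration; the source does not specify how its consensus model works.

```python
from collections import Counter


def consensus(votes: list[str], min_votes: int = 3, threshold: float = 0.66) -> str:
    """Decide the fate of one data item from reviewer votes.

    Each vote is "keep", "discard", or "change". A decision is accepted
    only if enough reviewers voted and a large enough share of them agree.
    """
    if len(votes) < min_votes:
        return "needs_more_votes"
    label, count = Counter(votes).most_common(1)[0]
    if count / len(votes) >= threshold:
        return label
    return "no_consensus"


decision_a = consensus(["keep", "keep", "discard"])      # 2 of 3 agree
decision_b = consensus(["keep", "discard"])              # too few reviewers
decision_c = consensus(["keep", "discard", "change"])    # three-way split
```

Requiring agreement among several independent reviewers limits how much damage a single malicious or careless participant can do, at the cost of needing more reviews per item.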

Data Formatting Phase

Once clean data comes out of the pipeline, it must be formatted once more so that it can be used to train the model.
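As an example of what this formatting might involve, the sketch below writes question-answer pairs as JSON Lines, one training record per line. The `prompt` and `completion` field names and the `to_jsonl` helper are assumptions; the required schema depends on the training framework, which the source does not name.

```python
import json


def to_jsonl(pairs: list[dict]) -> str:
    """Serialize question-answer pairs as JSON Lines (one record per line)."""
    lines = []
    for pair in pairs:
        record = {
            "prompt": pair["question"].strip(),
            "completion": pair["answer"].strip(),
        }
        # ensure_ascii=False keeps non-ASCII characters readable in the file
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)


# Placeholder pairs (invented for illustration only).
pairs = [
    {"question": " What is a sat? ", "answer": "The smallest unit of bitcoin."},
    {"question": "Who wrote the white paper?", "answer": "Satoshi Nakamoto."},
]
jsonl = to_jsonl(pairs)
```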

The last stage is where GPUs come into play, and it is the part most people think of when they hear about building language models. Everything described so far tends to be ignored.

This final stage involves training and experimenting with different models by adjusting parameters, blending data, changing the amount of data, and trying other model types. This can be costly, so it is vital to have excellent data. It is best to start with small models and work up gradually.
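The idea of starting small and working up can be sketched as an experiment plan sorted by a rough cost estimate. Everything here is hypothetical: the model sizes, data fractions, learning rates, and the cost proxy (parameter count times fraction of the data) are placeholders chosen for illustration, not values from the project.

```python
from itertools import product

# Hypothetical search space (assumed values, not from the source).
MODEL_SIZES = {"tiny": 125e6, "small": 1.3e9, "medium": 7e9}  # parameter counts
DATA_FRACTIONS = [0.1, 0.5, 1.0]                               # share of the dataset
LEARNING_RATES = [1e-5, 1e-4]


def experiment_plan() -> list[dict]:
    """List every combination of settings, cheapest runs first."""
    runs = []
    for (name, params), frac, lr in product(
        MODEL_SIZES.items(), DATA_FRACTIONS, LEARNING_RATES
    ):
        runs.append(
            {
                "model": name,
                "data_fraction": frac,
                "lr": lr,
                "cost": params * frac,  # crude relative cost proxy
            }
        )
    return sorted(runs, key=lambda r: r["cost"])


plan = experiment_plan()
```

Running the cheapest configurations first means data problems tend to surface before the expensive runs are started.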

The whole process is experimental, and what comes out the other end is a result.

The things humans manage to conjure up are remarkable. Moving on…

At Spirit of Satoshi, progress is well underway, and the company is working toward its results in several ways:

- Volunteers are being sought to help collect and organize the necessary data for the model. This is taking place at The Nakamoto Repository, an extensive collection of works including books, essays, articles, blogs, YouTube videos, and podcasts. The repository covers many topics, including Bitcoin and peripheral sources such as Friedrich Nietzsche, Oswald Spengler, Jordan Peterson, Hans-Hermann Hoppe, Murray Rothbard, Carl Jung, and the Bible.

- Within the repository, volunteers can search and access various types of content, such as URLs, text files, or PDFs. If any material is missing or should be included, volunteers can “add” an entry. Junk entries will be rejected. Ideally, data should be submitted as a .txt file together with a link.

- Community members can help clean the data and earn sats. This is the missionary stage. Various tools will let members take part in activities such as “FUD buster” and “rank replies.” The process resembles Tinder: you keep, discard, or comment on items to tidy up the data in the pipeline.

This gives those with extensive knowledge of Bitcoin a chance to convert their expertise into sats. While it won’t lead to riches, such opportunities can support meaningful projects and provide some income.

Not Artificial Intelligence, But Rather Probability Programs

I have argued in the past that “artificial intelligence” is a misnomer. It is artificial, but it does not possess actual intelligence. Furthermore, the fear associated with artificial general intelligence (AGI) is unfounded. There is no risk of it spontaneously becoming intelligent and causing harm. With time, my conviction of this has only strengthened.

Reflecting on John Carter’s insightful article entitled “The Tedium of Generative AI,” one is struck by its accuracy.

Frankly, there is nothing magical or intelligent about artificial intelligence. The more we interact with these systems and build them, the clearer it becomes that there is no consciousness there. No cognitive processes are taking place. There is no agency. These programs are based on probabilities.

The fear, uncertainty, and doubt stem primarily from the labels and terms used, such as “artificial intelligence,” “machine learning,” or “agents.”

These terms are attempts to describe processes that differ significantly from anything humans do. The language is the problem: we instinctively anthropomorphize these programs to make sense of them, and in the process the viewer or listener humanizes Frankenstein’s monster.

The existence of artificial intelligence depends solely on your imagination. It is no different from any other fictional end-of-the-world scenario.

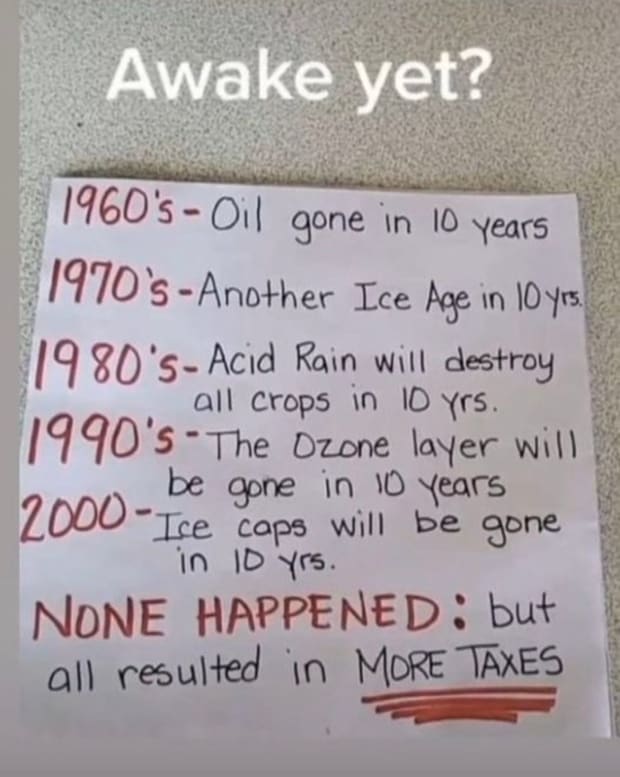

Think of climate change, aliens, or whatever else is the hot topic on Twitter/X.

This is useful for bureaucrats who want to turn such tools to their advantage. They have been weaving tales and narratives since their earliest days, and this is their newest creation. Because people tend to unthinkingly follow those who seem more intelligent, some are exploiting it.

Impending regulation has become a talking point. Recently, official rules for generative AI were introduced, thanks to our bureaucratic authorities. What they actually mean remains a mystery to everyone. The regulations are, as always, clothed in ridiculous language. The bottom line is clear: “We dictate the rules and control the tools. You must comply or face the consequences.”

Absurdly, some people celebrated, believing they were now protected from a non-existent monster. These agencies may even be credited with “saving us from AGI” because it never materialized.

It evokes a familiar feeling.

After posting the picture on Twitter, I was struck by the number of people who genuinely believe that such disasters were prevented solely by increased bureaucratic intervention. This revelation shed light on the level of collective intelligence on the platform.

Yet here we are. Once again. Another story, new faces.

Unfortunately, there’s not much we can do about it. It is better to focus on our own business. The mission remains unchanged.

Enthusiasm for GenAI is waning as people turn their attention to aliens and politics, which is unfortunate. It has proved less transformational than many of us assumed six months ago. We may yet be proven wrong. These tools have latent, untapped potential.

We need to change the way we view their nature. Instead of artificial intelligence, let’s call them “probabilistic programs.” Such a change could lead to a more practical approach, with less effort spent chasing unrealistic aspirations and more spent developing useful applications. We are left with curiosity and cautious optimism that something will happen at the intersection of Bitcoin, probabilistic programs, and protocols like Nostr. Something useful may emerge from this combination.

We hope this information on language model construction has been helpful.